MLPerf offers detailed, granular benchmarking for a wide array of platforms and architectures. While most ML benchmarking focuses on training, MLPerf focuses on inference, which is to say, the workload you use a neural network for after it's been trained. (credit: MLPerf)

When you want to see whether one CPU is faster than another, you have PassMark. For GPUs, there's Unigine's Superposition. But what do you do when you need to figure out how fast your machine-learning platform is—or how fast a machine-learning platform you're thinking of investing in is?

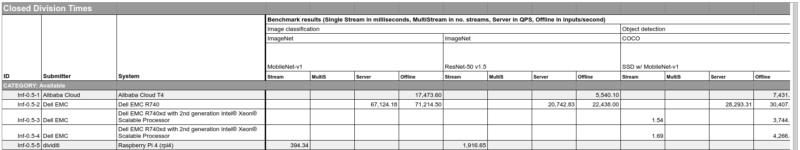

Machine-learning expert David Kanter, along with scientists and engineers from organizations such as Google, Intel, and Microsoft, aims to answer that question with MLPerf, a machine-learning benchmark suite. Measuring the speed of machine-learning platforms is a problem that becomes more complex the longer you examine it, since both problem sets and architectures vary widely across the field of machine learning—and in addition to performance, the inference side of MLPerf must also measure accuracy.

Training and inference

If you don't work with machine learning directly, it's easy to get confused about the terms. The first thing you must understand is that neural networks aren't really programmed at all: they're given a (hopefully) large set of related data and turned loose upon it to find patterns. This phase of a neural network's existence is called training. The more training a neural network gets, the better it can learn to identify patterns and deduce rules to help it solve problems.
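To make the distinction concrete, here is a minimal sketch of the two phases in plain Python. It is an illustration only: the single-neuron perceptron, the tiny logical-OR dataset, and the names `train`, `infer`, and `DATA` are assumptions chosen for brevity, not anything taken from MLPerf or its workloads. Training adjusts the weights from labelled examples; inference then applies the frozen weights to inputs, and that is the step where both speed and accuracy can be measured.

```python
import time

# Hypothetical toy dataset: the logical OR function, as (inputs, label) pairs.
DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]


def infer(model, x):
    """Inference: apply already-learned weights to one input."""
    weights, bias = model
    total = weights[0] * x[0] + weights[1] * x[1] + bias
    return 1 if total > 0 else 0


def train(samples, epochs=20, learning_rate=0.1):
    """Training: repeatedly nudge the weights toward the labelled answers
    using the classic perceptron update rule."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in samples:
            error = label - infer((weights, bias), x)
            weights[0] += learning_rate * error * x[0]
            weights[1] += learning_rate * error * x[1]
            bias += learning_rate * error
    return weights, bias


# Phase 1: training (done once, up front).
model = train(DATA)

# Phase 2: inference (what an inference benchmark would time and score).
start = time.perf_counter()
predictions = [infer(model, x) for x, _ in DATA]
elapsed = time.perf_counter() - start

accuracy = sum(p == label for p, (_, label) in zip(predictions, DATA)) / len(DATA)
print(f"accuracy={accuracy:.2f}, inference time={elapsed:.6f}s")
```

Real networks have millions of weights rather than three, but the split is the same: the expensive learning loop runs first, and the inference call is what gets executed over and over in production.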

https://ift.tt/2qwitLx