Intel's "Mega Trends in HPC" boil down to AI workloads, running on many kinds of hardware, largely in cloud—not on-premise—environments. (credit: Intel Corporation)

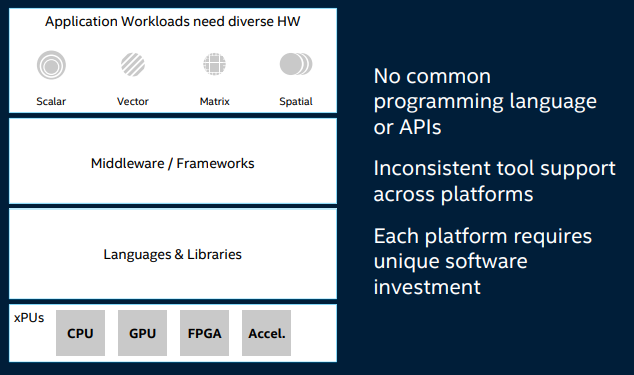

Saturday afternoon (Nov. 16) at Supercomputing 2019, Intel launched a new programming model called oneAPI. Intel describes the necessity of tightly coupling middleware and frameworks directly to specific hardware as one of the largest pain points of AI/Machine Learning development. The oneAPI model is intended to abstract that tight coupling away, allowing developers to focus on their actual project and re-use the same code when the underlying hardware changes.

This sort of "write once, run anywhere" mantra is reminiscent of Sun's early pitches for the Java language. However, Bill Savage, general manager of compute performance for Intel, told Ars that's not an accurate characterization. Although each approach addresses the same basic problem—tight coupling to machine hardware making developers' lives more difficult and getting in the way of code re-use—the approaches are very different.

In this simplified block diagram of AI/ML development, Intel wants to isolate "Languages & Libraries" as the biggest pain point. (credit: Intel Corporation)

When a developer writes Java code, the source is compiled to bytecode, and a Java Virtual Machine tailored to the local hardware executes that bytecode. Although many optimizations have improved Java's performance in the 20+ years since it was introduced, it's still significantly slower than C++ code in most applications—typically, anywhere from half to one-tenth as fast. By contrast, oneAPI is intended to produce direct object code with no or negligible performance penalties.
