
Turns out that Huang was cooking eight A100 GPUs, two Epyc 7742 64-core CPUs, nine Mellanox interconnects, and assorted oddments like RAM and SSDs. Mmmm, just like Grandma used to make. [credit: Nvidia]

This morning, everybody found out what CEO Jensen Huang was cooking—an Ampere-powered successor to the Volta-powered DGX-2 deep learning system.

Yesterday, we described the mysterious hardware in Huang's kitchen as likely "packing a few Xeon CPUs" in addition to the new successor to the Tesla V100 GPU. Egg's on our face for that one: the new system packs a pair of AMD Epyc 7742 64-core, 128-thread CPUs, along with 1TiB of RAM, a pair of 1.9TiB NVMe SSDs in RAID 1 for a boot drive, and up to four 3.8TiB PCIe 4.0 NVMe drives in RAID 0 as secondary storage.

Goodbye Intel, hello AMD


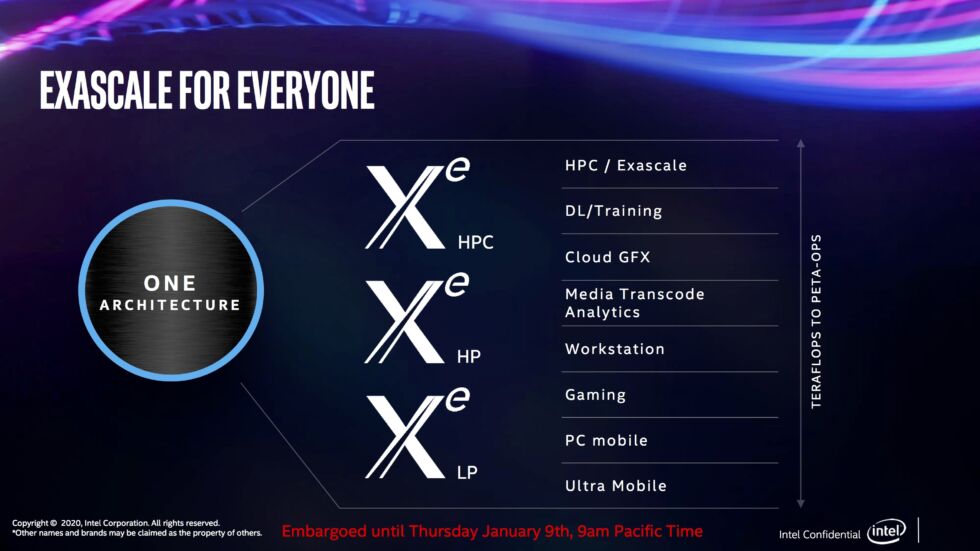

It seems entirely likely that Nvidia didn't want to monetarily support Intel's plans to muscle in on its own profitable deep-learning turf. [credit: Intel Corporation]

Technically, it shouldn't come as too much of a surprise that Nvidia would tap AMD for the CPUs in its flagship machine-learning nodes—Epyc Rome has been kicking Intel's Xeon server CPU line up and down the block for quite a while now. Staying on the technical side of things, Epyc 7742's support for PCIe 4.0 may have been even more important than its high CPU speed and massive core/thread count.
