(credit: Sean Gallup | Getty Images)

Large language models (LLMs) like ChatGPT and Google Bard can provide some decent answers to certain types of questions, but these computers are ironically pretty bad at computing stuff. Google has a new solution to try to get language models to do simple tasks, like math, correctly: have the AI write a program. Google says that now when you give Bard a "computational" task like math or string manipulation, the language model won't show its own output. Instead, it will write a program, execute that program, and then show the output of that program to the user as an answer.

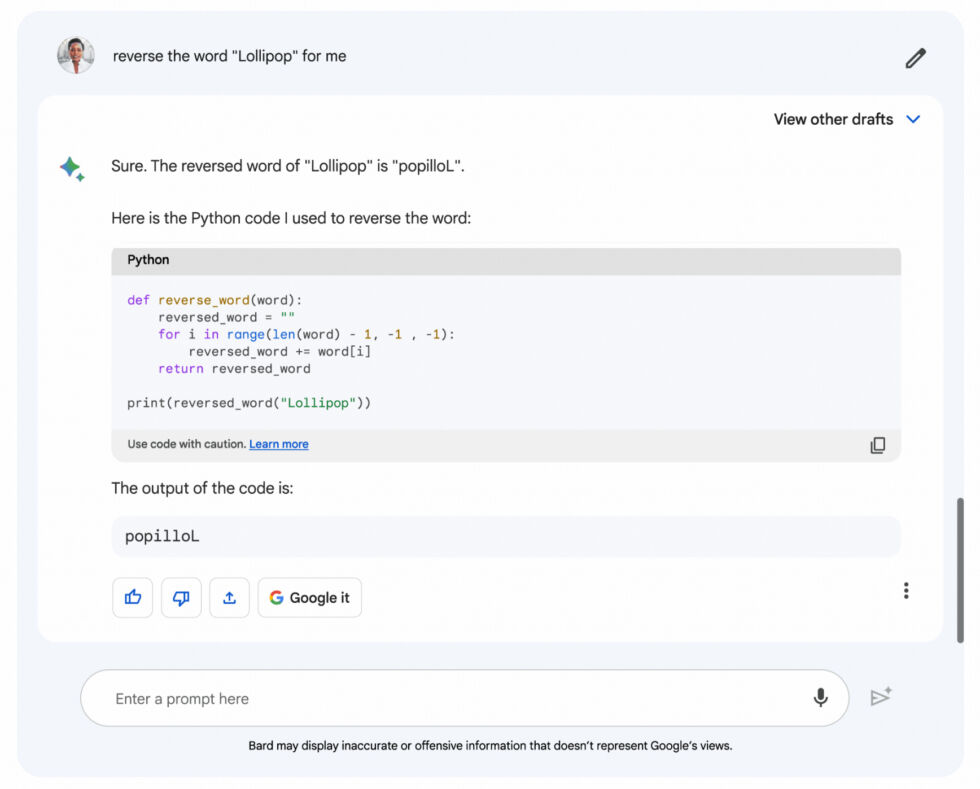

Google's blog post provides the example input of "Reverse the word 'Lollipop' for me." ChatGPT flubs this question and provides the incorrect answer "pillopoL," because language models see the world in chunks of words, or "tokens," and they just aren't good at this. Here is Bard's example output:

It gets the output correct as "popilloL," but more interesting is that it also includes the Python code it wrote to answer the question. That's neat for programming-minded people who want to see under the hood, but wow is that probably the scariest output ever for The Normals. It's also not particularly relevant? Imagine if Gmail showed you a block of code when you just asked it to fetch email. It's weird. Just do the job you were asked to do, Bard.
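For the curious, a program for this task can be tiny. The snippet below is a minimal sketch of the kind of Python that would do the job, not a copy of the code Bard actually showed; the function name `reverse_word` is made up for illustration.

```python
def reverse_word(word: str) -> str:
    """Return the characters of `word` in reverse order."""
    # A slice with a step of -1 walks the string from the last character to the first.
    return word[::-1]


if __name__ == "__main__":
    # Operates on individual characters, so tokenization never enters into it.
    print(reverse_word("Lollipop"))  # prints "popilloL"
```

Because the program works on individual characters rather than tokens, it gets the right answer every time, which is the whole point of handing the task off to code.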


